Hive File Formats

Hive supports several file formats:

- Text File

- SequenceFile

- RCFile

- Avro Files

- ORC Files

- Parquet

- Custom INPUTFORMAT and OUTPUTFORMAT

The hive.default.fileformat configuration parameter which is avaialble in hive-site.xml determines the format to use if it is not specified in a CREATE TABLE or ALTER TABLE statement.

Text file is the parameter's default value.

What is File Format ?

- File Format is a way in which information is stored or encoded in a computer file.

- In Hive it refers to how records are stored inside the file.

- These file formats mainly vary between data encoding, compression rate, usage of space and disk I/O.

- Hive does not verify whether the data that you are loading matches the schema for the table or not. However, it verifies if the file format matches the table definition or not.

Text File

- TEXT FILE format is a famous input/output format used in Hadoop.

- In Hive if we define a table as TEXTFILE it can load data of from csv (Comma Separated Values), tsv, txt, dat, lst, json files. And those formats can be delimited by comma, tab, Space.

- This means fields in each record should be separated by comma or space or tab or it may be JSON(JavaScript Object Notation) data.

- By default, if we use TEXTFILE format then each line is considered as a record.

CREATE TABLE olympic(

athelete STRING

, age INT

, country STRING

, year STRING

, closing STRING

, sport STRINGt

, gold INT

, silver INT

, bronze INT

, total INT)

row format delimited fields terminated by '\t';

- At the end, we need to specify the type of file format. If we do not specify anything it will consider the file format as TEXTFILE format.

- Here we are creating a table with name "olympic". The data inside the above input file is delimited by tab space.

- We can load this olympic_data into the created table as follows,

load data local inpath 'local path of your file' into table olympic;

SELECT * FROM olympic;

Sequence File

- We know that Hadoop's performance is drawn out when we work with a small number of files with big size rather than a large number of files with small size.

- If the size of a file is smaller than the typical block size in Hadoop, we consider it as a small file. Due to this, a number of metadata increases which will become an overhead to the NameNode. To solve this problem sequence files are introduced in Hadoop. Sequence files act as a container to store the small files.

- Sequence files are flat files consisting of binary key-value pairs.

- Sequence files are stores values in binary key-value pairs, which can be split and the main use of these files is to club two or more smaller files and make them as one sequence file.

- In Hive we can create a sequence file by specifying STORED AS SEQUENCEFILE in the end of a CREATE TABLE statement.

- There are three types of sequence files:

- Uncompressed key/value records.

- Record compressed key/value records - only 'values' are compressed here

- Block compressed key/value records - both keys and values are collected in 'blocks' separately and compressed.

- Hive has its own SEQUENCEFILE reader and SEQUENCEFILE writer libraries for reading and writing through sequence files.

- STORED AS SEQUENCEFILE means Stored as compressed Sequence File.

CREATE TABLE olympic_sequencefile

( athelete STRING

, age INT

, country STRING

, year STRING

, closing STRING

, sport STRINGt

, gold INT

, silver INT

, bronze INT

, total INT)

row format delimited fields terminated by '\t'

stored as sequencefile;

- Here we are creating a table with name "olympic_sequencefile". The data inside the above input file is delimited by tab space.

Loading data into Sequence File format table,

- Now to load data into this table is somewhat different from TEXTFILE format.

- You need to insert the data from another table because this SEQUENCEFILE format is the binary format.

- It compresses the data and then stores it into the table.

- If you want to load directly as in TEXTFILE format that is not possible because we cannot insert the compressed files into tables.

INSERT OVERWRITE TABLE olympic_sequencefile SELECT * FROM olympic;

SELECT * FROM olympic_sequencefile ;

RCFILE Format

- RCFILE stands of Record Columnar File which is another type of binary file format which offers high compression rate on the top of the rows.

- RCFILE is used when we want to perform operations on multiple rows (millions of rows) at a time.

- RCFILEs are flat files consisting of binary key/value pairs, which shares many similarities with SEQUENCEFILE.

- RCFILE stores columns of a table in form of record in a columnar manner.

- It first partitions rows horizontally into row splits and then it vertically partitions each row split in a columnar way.

- RCFILE first stores the metadata of a row split, as the key part of a record, and all the data of a row split as the value part.

- This means that RCFILE encourages column oriented storage rather than row oriented storage. This column oriented storage is very useful while performing analytics.

- This column oriented storage is very useful while performing analytics. It is easy to perform analytics when we “hive’ a column oriented storage type.

- Facebook uses RCFILE as its default file format for storing of data in their data warehouse as they perform different types of analytics using Hive.

- RCFile, columns are tab separated and rows are newline separated.

- ORC files are primarily used by Hive and Pig.

- Available in Hive 0.6.0 and later.

- Large Blocks - 4MB default

( athelete STRING

, age INT

, country STRING

, year STRING

, closing STRING

, sport STRINGt

, gold INT

, silver INT

, bronze INT

, total INT)

row format delimited fields terminated by '\t'

stored as rcfile;

- Here we are creating a table with name "olympic_rcfile". The data inside the above input file is delimited by tab space.

Loading data into RCFILE File format table,

- We cannot load data into RCFILE directly. First we need to load data into another table and then we need to overwrite it into our newly created RCFILE as shown below:

INSERT OVERWRITE TABLE olympic_rcfile SELECT * FROM olympic;

SELECT * FROM olympic_rcfile;

- Now we can perform the same basic SELECT operation which we have performed on the TEXTFILE format, on RCFILE format as well.

ORCFILE Format

- ORC stored the data in columnar format and the data will be in binary standard.

- ORC stands for Optimized Row Columnar which means it can store data in an optimized way than the other file formats.

- ORC reduces the size of the original data up to 75%(eg: 100GB file will become 25GB).

- As a result the speed of data processing also increases.

- ORC shows better performance than Text, Sequence and RC file formats.

- An ORC file contains rows data in groups called as Stripes along with a file footer.

- ORC format improves the performance when Hive is processing the data.

- Hive type support including datetime, decimal, and the complex types (struct, list, map, and union)

- ORC is Available in Hive 0.11.0 and later

- Larger Blocks - 256 MB by default, Has an index for block boundaries.

- While default is 64 MB, keep stripe size in between ¼ of block-size to 4 blocks-size (default ORC block size is 256 MB)

- Stripe size - (number of records, default 10K)

CREATE TABLE olympic_orcfile

( athelete STRING

, age INT

, country STRING

, year STRING

, closing STRING

, sport STRINGt

, gold INT

, silver INT

, bronze INT

, total INT)

row format delimited fields terminated by '\t'

stored as orcfile;

ORC File Structure,

- An ORC file contains groups of row data called stripes, along with auxiliary information in a file footer.

- The default stripe size is 250 MB. Large stripe sizes enable large, efficient reads from HDFS.

- At the end of the file a postscript holds compression parameters and the size of the compressed footer.

- The file footer contains a list of stripes in the file, the number of rows per stripe, and each column's data type. It also contains column-level aggregates count, min, max, and sum.

Stripe Structure,

- each stripe in an ORC file holds index data, row data, and a stripe footer.

- The stripe footer contains a directory of stream locations. Row data is used in table scans.

- Index data includes min and max values for each column and the row positions within each column.

Loading data into ORCFILE File format table,

- We cannot load data into ORCFILE directly. First we need to load data into another table and then we need to overwrite it into our newly created ORCFILE.

INSERT OVERWRITE TABLE olympic_orcfile SELECT * FROM olympic;

SELECT * FROM olympic_orcfile;

- Now let us perform the same basic SELECT operation which we have performed on the TEXTFILE format, on ORCFILE format as well.

Parquet Format

- It is an open source file format for Hadoop.

- Parquet stores nested data structures in a flat columnar format.

- Compared to a traditional approach where data is stored in row-oriented approach, parquet is more efficient in terms of storage and performance.

- Parquet is a column-oriented binary file format.

- The parquet is highly efficient for the types of large-scale queries. Parquet is especially good for queries scanning particular columns within a particular table.

- The Parquet table uses compression Snappy, gzip; currently Snappy by default.

- When we are processing Big data, cost required to store such data is more (Hadoop stores data redundantly I.e 3 copies of each file to achieve fault tolerance) along with the storage cost processing the data comes with CPU,Network IO, etc costs. As the data increases cost for processing and storage increases. Parquet is the choice of Big data as it serves both needs, efficient and performance in both storage and processing.

- Parquet is natively supported in a number of popular Hadoop frameworks: Pig, Impala, Hive, MR, Cascading.

- Available in Hive 0.13.0 and later

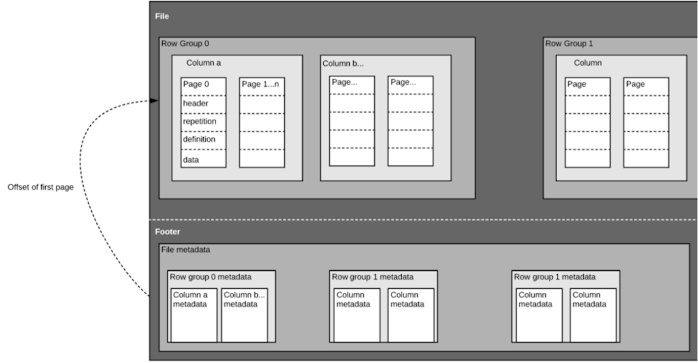

- Parquet file format consists of 2 parts – Data and Metadata.

Row group -> set of column values/data.

Footer -> we have metadata for separate row groups.

CREATE TABLE olympic_parquet

( athelete STRING

, age INT

, country STRING

, year STRING

, closing STRING

, sport STRINGt

, gold INT

, silver INT

, bronze INT

, total INT)

row format delimited fields terminated by '\t'

stored as parquet;

Loading data into Parquet File format table,

- We cannot load text file directly into parquet table , we should first create an alternate table to store the text file and use insert overwrite command to write the data in parquet format.

INSERT OVERWRITE TABLE olympic_parquet SELECT * FROM olympic;

SELECT * FROM olympic_parquet;

- In order to test performance, we should run the queries in Multi-node cluster, where jobs are parallelized and run simultaneously.

Avro File Format

- Avro is one of the preferred data serialization systems.

- It uses JSON for defining data types and protocols and serializes data in a compact binary format.

- Its primary use is in Apache Hadoop, where it can provide both a serialization format for persistent data, and a wire format for communication between Hadoop nodes, and from client programs (program written in any programming languages) to the Hadoop services.

- You can exchange data between Hadoop ecosystem and Avro is one of the popular file format in Big Data Hadoop based applications.

- This file format can be used in any of the Hadoop's tools like Pig and Hive.

- Using Avro, we can convert unstructured and semi-structured data into properly structured data using its schemas.

- Available in Hive 0.14.0 and later

- AVRO with Snappy and Deflate codecs has a strong compression of 92%.

Comments

Post a Comment